Docker & Container Security – Deployment of GenAI on Docker


GPT-3 (Generative Pre-trained Transformer 3) by OpenAI cannot be run locally in an individual Docker container: the model is not distributed for self-hosting, and its size and resource requirements are substantial. Instead, OpenAI provides API access to GPT-3 that developers can integrate into their applications. Here’s a step-by-step guide to setting up GPT-3 API access within a Docker container:

- Sign up for OpenAI API Access:

- Visit the OpenAI website and sign up for API access. You will need to provide information about your intended use case and agree to their terms of service.

- Get API Key:

- After approval, you will receive an API key from OpenAI. This key is necessary for authenticating requests to the GPT-3 API.

- Choose a Programming Language:

- Decide which programming language you want to use to interact with the GPT-3 API. Popular choices include Python, JavaScript, and others.

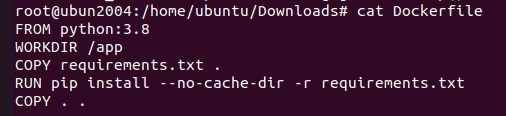

- Create a Dockerfile:

- Write a Dockerfile that specifies the environment and dependencies needed for your chosen programming language. Include any libraries or packages required for making HTTP requests and handling JSON responses.
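As a rough illustration of what such a Dockerfile might contain for a Python application, the sketch below writes one out from a short script. The base image tag (python:3.11-slim), the file names app.py and requirements.txt, and the helper write_dockerfile are all assumptions made for this example; adjust them to your own project.

```python
from pathlib import Path

# Assumed layout: app.py and requirements.txt sit next to the Dockerfile.
# The base image tag and file names are illustrative choices.
DOCKERFILE = """\
FROM python:3.11-slim

WORKDIR /app

# Install dependencies first so this layer is cached between code changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY app.py .

# The API key is deliberately NOT set here; pass it at run time with -e.
CMD ["python", "app.py"]
"""


def write_dockerfile(directory: str = ".") -> Path:
    """Write the sketch Dockerfile into `directory` and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path
```

Keeping the API key out of the Dockerfile means it is not baked into an image layer, where anyone with access to the image could read it.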

- Install OpenAI Library:

- Install the OpenAI library for your chosen programming language. This library provides a convenient interface for interacting with the GPT-3 API.

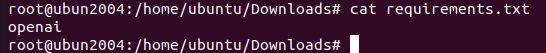

- For Python, you can use the openai library. In your requirements.txt file, add the line:

openai

- Set Up API Key:

- Make the API key provided by OpenAI available to your application as an environment variable, rather than hard-coding it in the source code or the Dockerfile.

export OPENAI_API_KEY=<your_api_key>

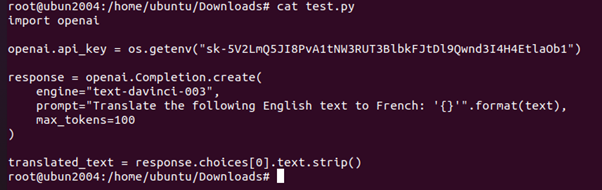
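On the application side, the key can then be read from the environment at startup. The following is a minimal sketch; the helper name load_api_key is hypothetical, and the only thing it assumes is that the variable is called OPENAI_API_KEY as in the export command above.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, failing fast if it is unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Stop at startup instead of failing later on the first API call.
        raise RuntimeError(
            f"{env_var} is not set; pass it with `docker run -e {env_var}=...`"
        )
    return key
```

Failing at startup makes a missing key show up immediately in the container logs rather than as an authentication error on the first request.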

- Write Application Code:

- Write code in your chosen programming language to interact with the GPT-3 API. This code will send prompts to the API and process the responses.

- Example Python code:


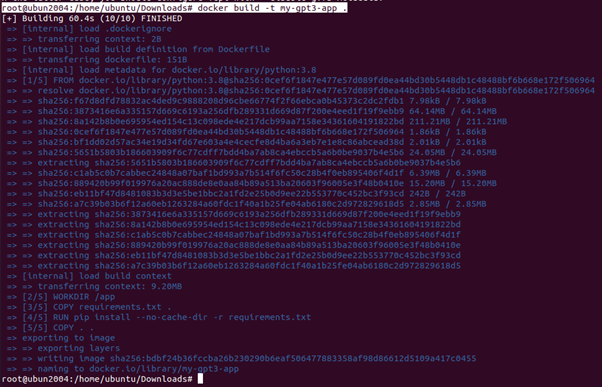
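A minimal sketch of such application code is shown below. It assumes version 1.x of the openai Python package and its chat completions interface. The model name is a placeholder, not something the steps above prescribe, so substitute whichever model your account can access. The function names build_messages and generate_reply are hypothetical.

```python
import os

# Placeholder model name (an assumption): use one your account can access.
MODEL = "gpt-3.5-turbo"


def build_messages(prompt: str) -> list[dict]:
    """Wrap a plain-text prompt in the chat message format."""
    return [{"role": "user", "content": prompt}]


def generate_reply(prompt: str, model: str = MODEL) -> str:
    """Send one prompt to the API and return the generated text."""
    # Imported here so the module loads even where the package is absent.
    from openai import OpenAI

    # The key comes from the environment variable set in the earlier step.
    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content
```

In an app.py entry point, calling print(generate_reply("Say hello from inside a container.")) would send a single prompt and print whatever text the API returns.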

- Build Docker Image:

- Build a Docker image using the Dockerfile you created earlier.

root@ubun2004:/home/ubuntu/Downloads# docker build -t my-gpt3-app .

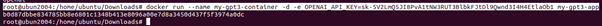

- Run Docker Container:

- Run a Docker container from the image you built in the previous step, passing any necessary environment variables.

root@ubun2004:/home/ubuntu/Downloads# docker run --name my-gpt3-container -d -e OPENAI_API_KEY=<your_api_key> my-gpt3-app

- Test Application:

- Test your Docker containerized application to ensure that it can successfully interact with the GPT-3 API and generate responses as expected.
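Before testing against the live API, it can help to check the application logic with a stand-in client, so the test needs neither network access nor a real key. The sketch below assumes the application calls the chat completions interface of the openai 1.x package; generate_reply, FakeCompletions, and make_fake_client are hypothetical names used only for this example.

```python
from types import SimpleNamespace


def generate_reply(client, prompt: str, model: str = "gpt-3.5-turbo") -> str:
    """Application logic under test: one prompt in, generated text out."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


class FakeCompletions:
    """Stand-in for client.chat.completions that records the request."""

    def __init__(self, reply: str):
        self.reply = reply
        self.last_request = None

    def create(self, **kwargs):
        self.last_request = kwargs
        message = SimpleNamespace(content=self.reply)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


def make_fake_client(reply: str) -> SimpleNamespace:
    """Build an object shaped like the real client, with no network access."""
    return SimpleNamespace(chat=SimpleNamespace(completions=FakeCompletions(reply)))


def test_generate_reply_returns_text():
    client = make_fake_client("hello")
    assert generate_reply(client, "Say hello") == "hello"
    sent = client.chat.completions.last_request
    assert sent["messages"][0]["content"] == "Say hello"
```

A test like this does not replace an end-to-end check: once the container is running, docker logs my-gpt3-container shows whether the real API call succeeded.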

- Monitor and Maintain:

- Monitor the performance and usage of your Docker container and application over time. Implement logging and monitoring solutions to track any errors or issues.

- Regularly update the Docker image and dependencies to incorporate security patches and improvements.
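One simple way to start on logging is to wrap each API call so that its duration and any failure are recorded. The sketch below uses only the Python standard library; the logger name gpt3_app and the helper call_with_logging are assumptions for illustration. By default these log lines go to standard error, which Docker captures, so they appear in docker logs.

```python
import logging
import time

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
)
logger = logging.getLogger("gpt3_app")


def call_with_logging(func, *args, **kwargs):
    """Run func, logging how long it took and any exception it raised."""
    start = time.perf_counter()
    try:
        result = func(*args, **kwargs)
    except Exception:
        # logger.exception records the traceback, then the error propagates.
        logger.exception("API call failed after %.2fs", time.perf_counter() - start)
        raise
    logger.info("API call succeeded in %.2fs", time.perf_counter() - start)
    return result
```

Any function that talks to the API can be passed in as func, which keeps the timing and error handling in one place.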

Additional Considerations:

- Resource Allocation: Consider using the --cpus and --memory options with docker run to limit the CPU and memory available to your GenAI container.

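- Security: For production use, explore best practices for hardening your Docker environment and containers, such as running as a non-root user, dropping unneeded capabilities, and keeping secrets like the API key out of the image.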
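The two considerations above can be combined in a single docker run invocation. The sketch below builds that command as an argument list in Python. The default limits (1 CPU, 512m) and the particular hardening flags are illustrative assumptions, not recommendations from Docker or OpenAI, and docker_run_args and run_container are hypothetical helper names. A read-only root filesystem in particular may need relaxing if your application writes files.

```python
import subprocess


def docker_run_args(
    image: str,
    name: str,
    cpus: float = 1.0,
    memory: str = "512m",
) -> list[str]:
    """Build a `docker run` command with resource limits and basic hardening."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "--cpus", str(cpus),       # cap CPU usage at this many cores' worth
        "--memory", memory,        # hard memory limit, e.g. 512m or 1g
        "--read-only",             # mount the root filesystem read-only
        "--cap-drop", "ALL",       # drop all Linux capabilities
        "--security-opt", "no-new-privileges",
        "-e", "OPENAI_API_KEY",    # copy the value from the host environment
        image,
    ]


def run_container(image: str, name: str) -> None:
    """Start the container; raises CalledProcessError if docker fails."""
    subprocess.run(docker_run_args(image, name), check=True)
```

Passing -e OPENAI_API_KEY without a value tells Docker to take the value from the calling environment, which keeps the key out of the command line and shell history.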

Remember:

- These are general steps, and the specifics may vary depending on your chosen GenAI application.

- Consult the documentation for your specific GenAI application or chosen pre-built image for detailed usage instructions.

@SAKSHAM DIXIT